AnthonyIsaacson

new claude phishing scam just dropped 📸

SDK Test Tweet - 2026-02-01T18:17:08.004Z

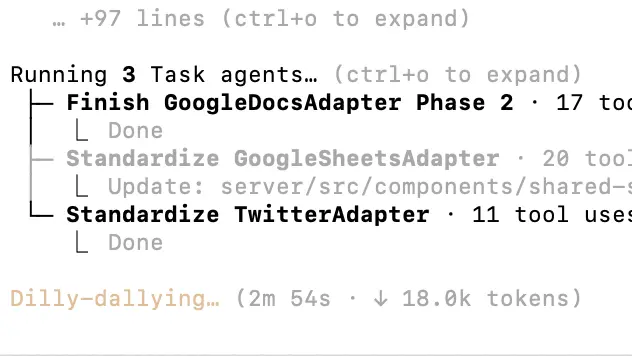

I knew my experience nannying 6 SWE interns would pay off someday

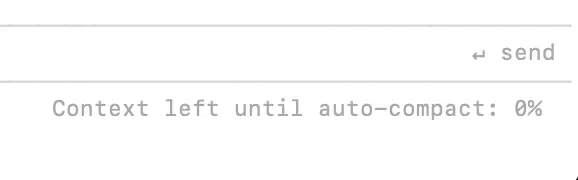

the dev equivalent of pushing the gas tank past 0 miles range

SDK Test Tweet - 2026-01-21T23:43:37.388Z

hello world

open twitter

50 straight posts about Claude Code

close twitter

- Covid

- Epstein files

- Cluely

- AI agents

- (new) Claude Code

Enough.

99% of "narrative making" in crypto is just ignorance

"we're the first xyz"

almost everything was tried in 2021, you just never bothered to google it bro

"you're absolutely right" is a crutch llms use to get around partial understanding of problems

jumping into 1M+ line codebases w/ 4k lines of context on a whim is impossible without assuming the user's prompt is correct

agent mode doesn't solve this. The LLM's prior is "the user is right", which is hard to refute

the next "leap" will be when ai can challenge our assumptions, but I don't think we're getting there with the current patterns.

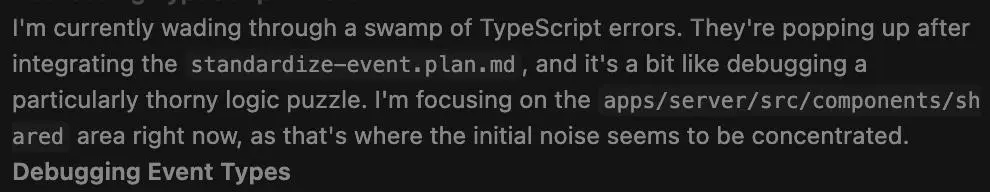

3 trained on the inner monologue of an anime protagonist

"there's zero innovation in the sf tech scene, just performative losers building b2b saas"

- performative loser in sf, building b2b saas

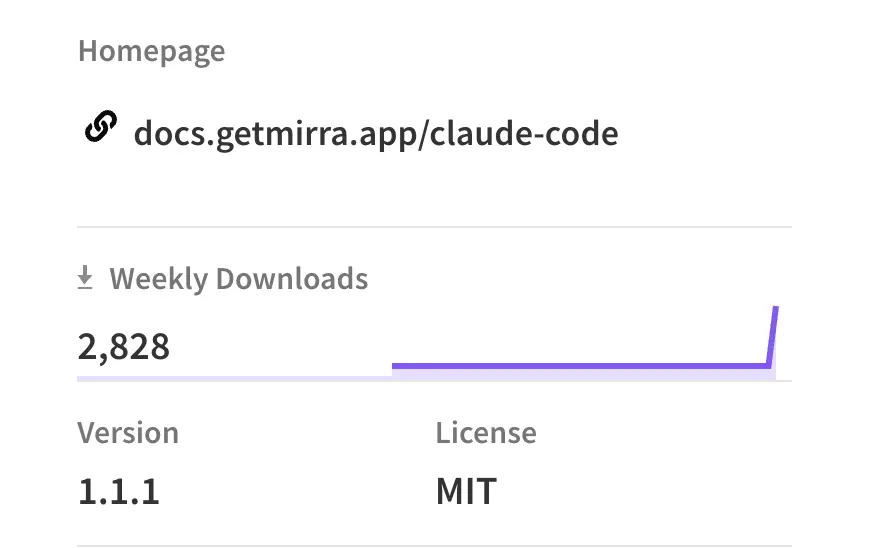

totally organic agent usage volume, nothing to see here

x402 scan is the answer to the question

> who is willing to lie to you for money?

rare claude holy shit (?)

the right thing to build is painfully obvious right now

narrative traders are the natural complement of the larp

> say whatever must be said to make the price go up

attention from this class of investor is dangerous when building something capable of success at a fundamentals level

they are not your friends. root them out.

when the "Get Started" button goes to a product instead of a github repo

@mirra $fxn
